Our AI text to speech technology delivers thousands of high-quality, human-like voices in 32 languages. Whether you’re looking for a free text to speech solution or a premium voice AI service for commercial projects, our tools can meet your needs

Discover how to seamlessly integrate AI voices into video game development, enhancing character realism and streamlining localization.

Creating an immersive video game experience is an art form that demands creativity, technological innovation, and, crucially, time. The first-person shooter Duke Nukem Forever famously took 14 years to produce.

Among the challenges faced by video game developers, voiceover recording and localization are particularly time-consuming hurdles.

Thankfully, there’s a potential game changer: AI voice generation. With the capacity to generate professional-quality voiceovers in a fraction of the time, AI voice technology offers a streamlined, cost-effective alternative to the traditional voice acting industry.

AI voices are generated by algorithmic models trained on vast libraries of recorded human speech. Using these datasets and machine learning techniques, the models can both synthesize new voices and clone existing ones, which gives developers broad room for adaptation and customization.

At the core of this innovation is voice cloning, a process that begins with a sample of human speech. Once the voice is cloned, any text input can be rendered in that voice with remarkable accuracy, capturing the unique inflections, intonations, and nuances of the original speaker. This has proven especially useful for making video game NPCs more realistic and for helping ensure that no two characters sound alike.

The technical foundation of AI voices also includes Automatic Speech Recognition (ASR), which lets voice generators recognize spoken dialogue and transcribe it into text. In addition, Natural Language Processing (NLP) helps these systems interpret the context and intent behind words, so that generated lines are delivered in a way that fits their meaning.

Voice synthesis uses neural networks and deep learning models to generate human-like speech from text. Although text-to-speech remains the most common use case in gaming, newer approaches such as ElevenLabs' speech-to-speech technology promise finer control over modulation and delivery. Speech-to-speech transforms a recorded vocal performance into a different voice.

Speech-to-speech technology is likely to further improve the precision, realism, and overall versatility of AI voices.

AI voices are helping game developers at every stage of game creation, from pre-production to distribution. For example, voice cloning can be used to generate NPC voices, giving more expression to characters that often sound robotic. Meanwhile, voice libraries can save developers time when sourcing voices.

In July 2023, UK game studio Magicave partnered with ElevenLabs to transform narration for its upcoming game, Beneath the Six. Currently in development, the game will feature in-game narration by Tom Canton, known for Netflix’s hit show The Witcher.

Magicave and ElevenLabs’ partnership will use text-to-speech models with context-based delivery to generate fresh, individualized AI narration. Thanks to high compression, the AI narration fits seamlessly into the game while offering players a far more creative experience.

Beneath the Six’s AI narrator is an exciting sign of things to come for games that use AI voice technology. It points to a future in which the artistry of even the busiest actors can enliven any video game story.

Choosing an AI voice generator depends on a game’s specific needs. Let’s take a look at three of the top AI voice generators currently on the market.

ElevenLabs offers realistic, creative voice generation through three key tools: a voice library, an intelligent text-to-speech model that generates synthetic character voices, and AI dubbing, which smoothly translates character voices into dozens of languages.

ElevenLabs’ strengths are its language support, realism, and fine-tuning options. Voices generated by TTS or dubbing are designed to mimic the natural pauses, intonation, and emotional inflection of human speech, helping to create life-like characters.

Replica Studios was an early mover in integrating AI voices into games and offered a suite of useful software. In 2023, Replica announced Smart NPCs, a game-engine-compatible plug-in for quickly generating hundreds of NPC voices.

On the plus side, Replica’s voices are true to life, and the company is trusted by a range of major partners. Multiple export formats help ensure compatibility across games, and Replica emphasizes ethics and security in its work with studios.

However, with enterprise partners like Google, Replica may be too pricey for some indie game developers. The software is also less intuitive for developers who are new to integrating AI into their games.

PlayHT provides high-quality AI voice cloning and text-to-speech (TTS) designed for the film, animation, and game industries. With support for 142 languages and unique features like Multi-Voice tools and Custom Pronunciations, PlayHT offers exciting prospects to game developers looking to integrate AI into their workflow.

Customization options let developers generate synthetic character voices with distinct levels of emotional expression and cater to a variety of dialects, speech styles, and intonations.

However, PlayHT’s model is still in beta and often produces inaccuracies, which can slow down developers who rely on AI voice generation. It’s also one of the most expensive tools available ($31/month), which may price out individuals and indie developers.

When integrating AI voices into video games, developers must carefully weigh the pros against the cons.

Integrating AI voices into video game development marks a huge step forward. It helps address the complex challenges of recording voices and localizing games for players around the world.

Now, developers can use AI to populate games with life-like, captivating characters with their own emotions, making games far more engaging and immersive for players.

By using this technology thoughtfully, game makers can overcome hurdles such as making AI voices sound natural and ensuring that voice actors are treated fairly. This way, everyone wins: the people making the games, the voice actors, and the gamers playing them.

Want to try ElevenLabs' AI voice generation capabilities for yourself? Get started here.

Our AI text to speech technology delivers thousands of high-quality, human-like voices in 32 languages. Whether you’re looking for a free text to speech solution or a premium voice AI service for commercial projects, our tools can meet your needs

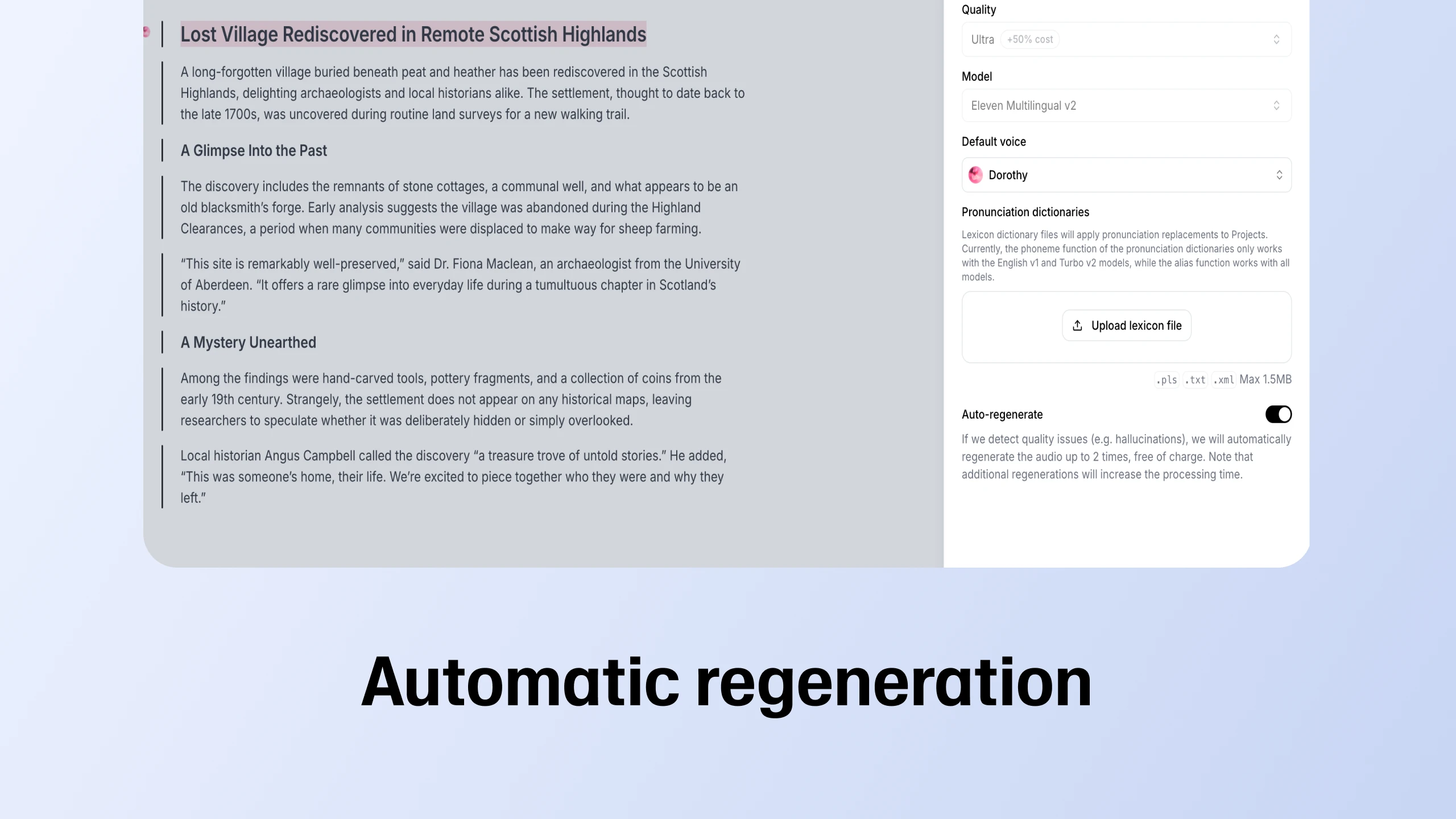

Our long form text editor now lets you regenerate faulty fragments, adjust playback speed, and provide quality feedback

Developers brought ideas to life using AI, from real time voice commands to custom storytelling